Improving the Efficiency of the MapReduce Scheduling Algorithm in Hadoop

Product Description


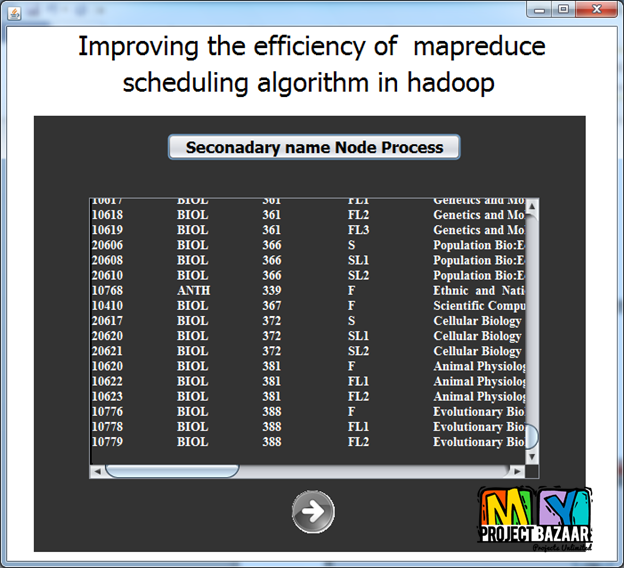

Abstract: This project aims to improve the efficiency of the MapReduce scheduling algorithm in Hadoop. Hadoop is a free, Java-based programming framework that supports the processing of large datasets in a distributed computing environment. Hadoop uses the MapReduce technique to process and generate large datasets with a parallel, distributed algorithm on a cluster. A key benefit of MapReduce is that it automatically handles failures and hides the complexity of fault tolerance from the user. By default, Hadoop uses the FIFO (First In, First Out) scheduling algorithm, in which jobs are executed in the order of their arrival. This method works well in a homogeneous cloud but performs poorly in a heterogeneous one. The LATE (Longest Approximate Time to End) algorithm was developed later; it reduces response time by a factor of 2 compared with FIFO and gives better performance in heterogeneous environments. LATE is based on three principles: i) prioritising tasks to speculate, ii) selecting fast nodes to run on, and iii) capping speculative tasks to prevent thrashing. Although LATE takes action on the tasks it judges to be slow, it cannot compute the remaining time of tasks correctly and therefore cannot find the tasks that are really slow. Finally, the SAMR (Self-Adaptive MapReduce) scheduling algorithm is introduced, which finds slow tasks dynamically by using the historical information recorded on each node to tune its parameters. SAMR reduces execution time by 25% compared with FIFO and by 14% compared with LATE.
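The three LATE principles described in the abstract can be illustrated with a minimal Python sketch. It is not Hadoop's implementation: the `Task` class, the function names, and the fixed `slow_rate_threshold` are hypothetical and chosen only for illustration. The sketch assumes that a task's remaining time is estimated from its average progress rate so far, which is the kind of estimate the abstract says can be inaccurate. The history-based tuning used by SAMR is not modelled here.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical view of a running task (not a Hadoop class)."""
    task_id: str
    progress: float        # fraction complete, 0.0 to 1.0
    elapsed: float         # seconds since the task started
    speculated: bool = False


def time_to_end(task):
    """Estimate remaining seconds, assuming the average rate so far continues."""
    if task.progress <= 0.0 or task.elapsed <= 0.0:
        return float("inf")
    rate = task.progress / task.elapsed
    return (1.0 - task.progress) / rate


def pick_speculative_task(tasks, node_is_fast, running_speculative,
                          cap, slow_rate_threshold):
    """Return the task to re-launch on the requesting node, or None."""
    # Principle ii: only launch speculative copies on fast nodes.
    # Principle iii: cap the number of speculative tasks to prevent thrashing.
    if not node_is_fast or running_speculative >= cap:
        return None
    # Consider only unfinished, not-yet-speculated tasks whose progress rate
    # is below the (assumed, fixed) slow-task threshold.
    candidates = [
        t for t in tasks
        if not t.speculated
        and t.elapsed > 0.0
        and t.progress < 1.0
        and t.progress / t.elapsed < slow_rate_threshold
    ]
    if not candidates:
        return None
    # Principle i: prioritise the task with the longest estimated time to end.
    return max(candidates, key=time_to_end)


tasks = [
    Task("a", progress=0.9, elapsed=90.0),
    Task("b", progress=0.2, elapsed=100.0),
    Task("c", progress=0.5, elapsed=50.0),
]
chosen = pick_speculative_task(tasks, node_is_fast=True,
                               running_speculative=0, cap=2,
                               slow_rate_threshold=0.005)
print(chosen.task_id if chosen else None)
```

In this example only task `b` progresses more slowly than the threshold, so it is the one selected for speculative execution. A request from a slow node, or a request made once the cap is reached, selects nothing.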

Additional Information

| Domains | |
|---|---|
| Programming Language |