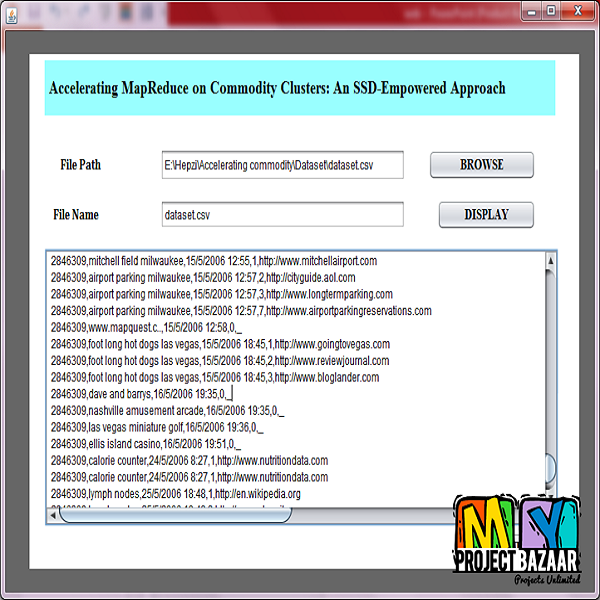

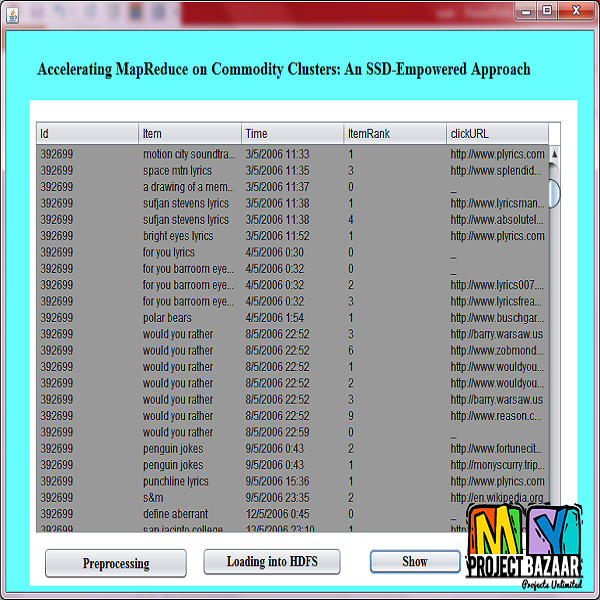

Accelerating MapReduce on Commodity Clusters: An SSD-Empowered Approach

Product Description


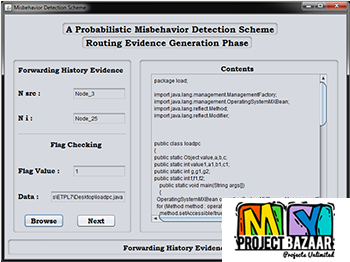

Abstract: MapReduce, a programming model and implementation for processing large data sets on clusters with hundreds or thousands of nodes, has gained wide adoption. Despite this, we found that MapReduce on commodity clusters, which usually have limited memory, hard-disk drive (HDD) storage, and multi-core or many-core processors, does not scale as expected as the number of processor cores increases. The key reason is that the underlying low-speed HDD storage cannot keep up with the frequent IO operations. In-memory caching can improve IO, but it is costly and sometimes still falls short of the desired result because memory is limited. To address the problem and make MapReduce more scalable on commodity clusters, we present mpCache, a solution that uses a solid-state drive (SSD) to cache the input data and localized data of MapReduce tasks. To strike a good trade-off between cost and performance, mpCache dynamically allocates the cache space between input data and localized data and applies a cache replacement scheme. We have implemented mpCache in Hadoop and evaluated it on a 7-node commodity cluster with 13 benchmarks. The experimental results show that mpCache achieves an average speedup of 2.09x over Hadoop and an average speedup of 1.79x over PACMan, the latest in-memory optimization of MapReduce.
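The abstract does not spell out how mpCache allocates space or chooses what to evict. Purely to illustrate the general idea it describes (one fixed-size cache shared by input data and localized data, with a movable boundary between them), a minimal sketch might look like the following. The class name `TwoClassCache`, the LRU eviction policy, and the `set_input_share` knob are all assumptions made for illustration; they are not mpCache's actual design.

```python
from collections import OrderedDict


class TwoClassCache:
    """Toy fixed-capacity cache split between 'input' and 'local' data.

    Hypothetical illustration only: LRU eviction and the share knob are
    assumptions, not the policies used by mpCache.
    """

    KINDS = ("input", "local")

    def __init__(self, capacity, input_share=0.5):
        self.capacity = capacity
        # Each partition maps block key -> block size, ordered oldest first.
        self.parts = {kind: OrderedDict() for kind in self.KINDS}
        self.used = {kind: 0 for kind in self.KINDS}
        self.set_input_share(input_share)

    def set_input_share(self, share):
        """Move the boundary between partitions, evicting any overflow."""
        if not 0.0 <= share <= 1.0:
            raise ValueError("share must be in [0, 1]")
        input_quota = int(self.capacity * share)
        self.quota = {"input": input_quota,
                      "local": self.capacity - input_quota}
        for kind in self.KINDS:
            self._evict(kind, 0)

    def _evict(self, kind, incoming):
        """Drop least recently used blocks until `incoming` units fit."""
        part = self.parts[kind]
        while part and self.used[kind] + incoming > self.quota[kind]:
            _, size = part.popitem(last=False)
            self.used[kind] -= size

    def put(self, kind, key, size):
        """Cache a block; return False if it exceeds the partition quota."""
        part = self.parts[kind]
        if key in part:
            # Replace any stale copy of the same block.
            self.used[kind] -= part.pop(key)
        if size > self.quota[kind]:
            return False
        self._evict(kind, size)
        part[key] = size
        self.used[kind] += size
        return True

    def get(self, kind, key):
        """Return True on a hit and mark the block as recently used."""
        part = self.parts[kind]
        if key not in part:
            return False
        part.move_to_end(key)
        return True
```

In this sketch, a caller that observes more pressure on input data could call `set_input_share(0.8)` to grow that partition; the localized-data partition then shrinks and evicts its least recently used blocks to fit. How the boundary should actually be chosen at run time is exactly the policy question the paper addresses, and it is left out here.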
