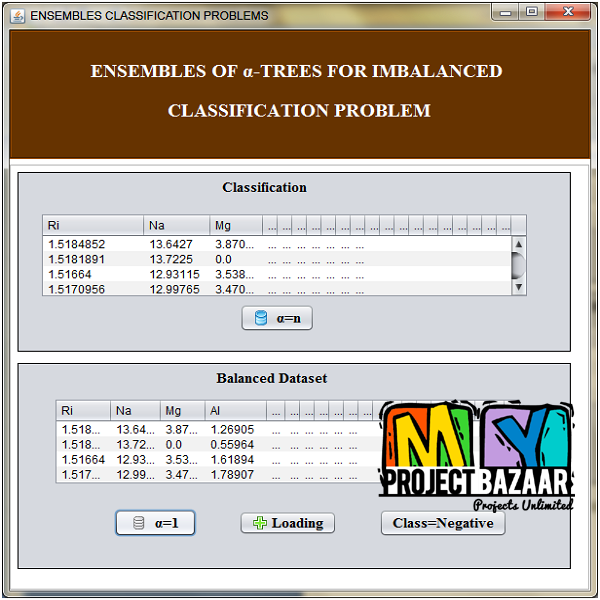

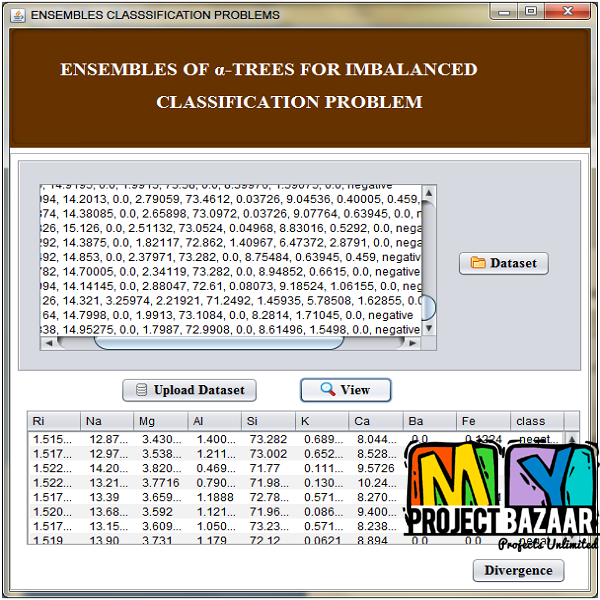

Ensembles of α-Trees for Imbalanced Classification Problems

Product Description

Abstract: This paper introduces two kinds of decision tree ensembles for imbalanced classification problems, both of which make extensive use of the properties of α-divergence. First, a novel splitting criterion based on α-divergence is shown to generalize several well-known splitting criteria, such as those used in C4.5 and CART. When the α-divergence splitting criterion is applied to imbalanced data, varying the value of α yields decision trees that tend to be less correlated (α-diversification). This increased diversity in an ensemble of such trees improves AUROC values across a range of minority class priors. The second ensemble uses the same α-trees as base classifiers but applies a lift-aware stopping criterion during tree growth. The resulting ensemble produces a set of interpretable rules that provide higher lift values for a given coverage, a property that is highly desirable in applications such as direct marketing. Experimental results across many class-imbalanced data sets, including the BRFSS and MIMIC data sets from the medical community and several sets from UCI and KEEL, are provided to highlight the effectiveness of the proposed ensembles over a wide range of data distributions and degrees of class imbalance.
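To make the idea of an α-divergence splitting criterion concrete, here is a minimal Python sketch. It assumes the standard α-divergence for normalized discrete distributions, (1 − Σ p^α q^(1−α)) / (α(1−α)), and scores a candidate split by the divergence between the joint distribution of (branch, class) and the product of its marginals. The function names `alpha_divergence` and `split_score` and the `counts` layout are hypothetical choices for illustration, and the paper's exact formulation may differ. In the limit α → 1 the divergence becomes the KL divergence, so the score reduces to mutual information, which is the information gain used by C4.5-style trees.

```python
import math


def alpha_divergence(p, q, alpha):
    """Alpha-divergence between discrete distributions p and q (alpha > 0).

    Assumes p and q are normalized and that q[i] == 0 implies p[i] == 0.
    """
    if abs(alpha - 1.0) < 1e-9:
        # Limit alpha -> 1: Kullback-Leibler divergence KL(p || q).
        return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Terms with q[i] == 0 contribute nothing because p[i] is then 0 as well.
    s = sum((pi ** alpha) * (qi ** (1.0 - alpha))
            for pi, qi in zip(p, q) if qi > 0)
    return (1.0 - s) / (alpha * (1.0 - alpha))


def split_score(counts, alpha):
    """Score a candidate split; higher means a stronger branch/class dependence.

    counts[b][c] is the number of training samples that fall into branch b
    and belong to class c (a hypothetical layout chosen for this sketch).
    """
    n = sum(sum(row) for row in counts)
    n_classes = len(counts[0])
    joint = [c / n for row in counts for c in row]
    p_branch = [sum(row) / n for row in counts]
    p_class = [sum(row[c] for row in counts) / n for c in range(n_classes)]
    # Product of marginals, flattened in the same (branch, class) order.
    product = [pb * pc for pb in p_branch for pc in p_class]
    return alpha_divergence(joint, product, alpha)


if __name__ == "__main__":
    candidate = [[40, 5], [10, 45]]  # two branches, two classes
    for a in (0.5, 1.0, 2.0):
        print(a, split_score(candidate, a))
```

Because different values of α can rank candidate splits differently, growing one tree per α value is one plausible way to obtain the less correlated trees the abstract describes.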
