Toward Optimal Feature Selection in Naive Bayes for Text Categorization

Product Description

Toward Optimal Feature Selection in Naive Bayes for Text Categorization

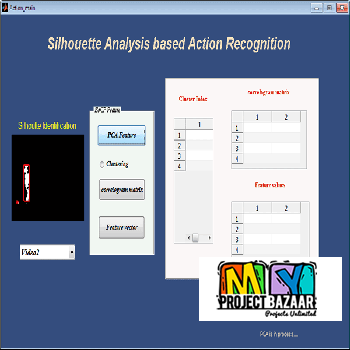

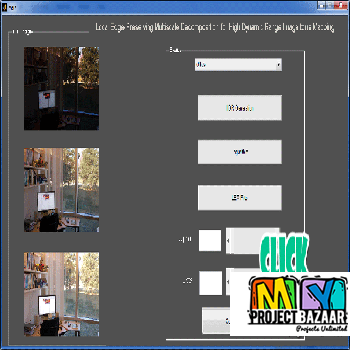

Abstract—Automated feature selection is important for text categorization, both to reduce the feature size and to speed up the learning process of classifiers. In this paper, we present a novel and efficient feature selection framework based on information theory, which aims to rank features by their discriminative capacity for classification. We first revisit two information measures, Kullback-Leibler divergence and Jeffreys divergence, for binary hypothesis testing, and analyze their asymptotic properties relating to type I and type II errors of a Bayesian classifier.
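To make the ranking idea concrete, here is a minimal sketch assuming a two-class setting in which each feature is the presence or absence of a term in a document, modeled as a Bernoulli variable per class. It scores each term by the Jeffreys divergence J(P, Q) = D(P||Q) + D(Q||P), the symmetrized Kullback-Leibler divergence between the term's class-conditional distributions, and sorts terms by that score. This is not the paper's own algorithm, whose exact scoring is not given in this excerpt. The function names (`kl_bernoulli`, `jeffreys_bernoulli`, `rank_features`), the Laplace smoothing parameter `alpha`, and the toy corpus are hypothetical, chosen only for illustration.

```python
import math


def kl_bernoulli(p: float, q: float, eps: float = 1e-12) -> float:
    """KL divergence D(P||Q) between Bernoulli(p) and Bernoulli(q), in nats."""
    # Clamp away from 0 and 1 so the logarithms stay finite.
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def jeffreys_bernoulli(p: float, q: float) -> float:
    """Jeffreys divergence: the symmetrized KL divergence D(P||Q) + D(Q||P)."""
    return kl_bernoulli(p, q) + kl_bernoulli(q, p)


def rank_features(docs, labels, vocab, alpha=1.0):
    """Rank terms by Jeffreys divergence between their class-conditional
    presence probabilities (hypothetical helper; labels are 0 or 1)."""
    pos = [d for d, y in zip(docs, labels) if y == 1]
    neg = [d for d, y in zip(docs, labels) if y == 0]
    scores = {}
    for term in vocab:
        # Laplace-smoothed probability that the term occurs in a document of each class.
        p = (sum(term in d for d in pos) + alpha) / (len(pos) + 2 * alpha)
        q = (sum(term in d for d in neg) + alpha) / (len(neg) + 2 * alpha)
        scores[term] = jeffreys_bernoulli(p, q)
    # Higher divergence means the term separates the two classes better.
    return sorted(vocab, key=lambda t: scores[t], reverse=True), scores


# Toy corpus: each document is a set of terms; label 1 = sports, 0 = politics.
docs = [{"goal", "match"}, {"goal", "team"}, {"vote", "party"}, {"vote", "match"}]
labels = [1, 1, 0, 0]
vocab = ["goal", "vote", "match", "team", "party"]

ranking, scores = rank_features(docs, labels, vocab)
print(ranking)  # ['goal', 'vote', 'team', 'party', 'match']
```

In this toy corpus, "goal" and "vote" each occur in only one class and receive the highest score (ln 3 with the assumed smoothing). "match" occurs equally in both classes and scores 0, so it would be the first feature discarded. The symmetric Jeffreys form is used here because plain KL divergence depends on which class is taken as the reference.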
