HDM: A Composable Framework for Big Data Processing

Product Description

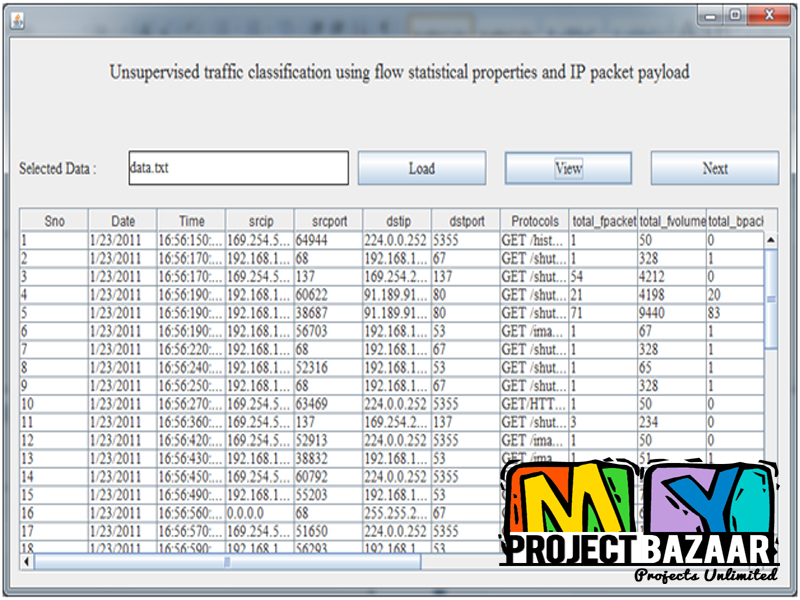

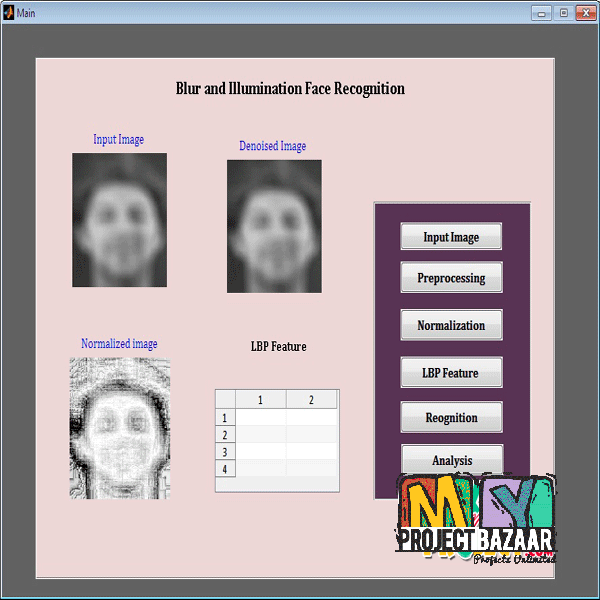

Abstract: Over the past years, frameworks such as MapReduce and Spark have been introduced to ease the task of developing big data programs and applications. However, the jobs in these frameworks are roughly defined and packaged as executable jars without any functionality being exposed or described. This means that deployed jobs are not natively composable or reusable for subsequent development. It also hampers the ability to apply optimizations to the data flow of job sequences and pipelines. In this paper, we present the Hierarchically Distributed Data Matrix (HDM), a functional, strongly-typed data representation for writing composable big data applications. Along with HDM, a runtime framework is provided to support the execution, integration and management of HDM applications on distributed infrastructures. Based on the functional data dependency graph of HDM, multiple optimizations are applied to improve the performance of executing HDM jobs. The experimental results show that our optimizations can reduce the Job-Completion-Time by between 10% and 40% for different types of applications when compared with the current state of the art, Apache Spark.
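To make the idea of a functional data dependency graph more concrete, the following is a minimal sketch in Python. It is not the HDM API: the `Node` class, the `fuse` function and the `clean` pipeline are hypothetical names introduced only for illustration. The sketch assumes a lazy graph in which each node records its parent and the function applied to it, so that a job is an ordinary value that can be reused and composed, and so that a simple optimization (fusing consecutive element-wise maps into one) can be applied before execution.

```python
from dataclasses import dataclass
from typing import Callable, Generic, List, Optional, TypeVar

T = TypeVar("T")
U = TypeVar("U")


@dataclass(frozen=True)
class Node(Generic[T]):
    """One vertex of a lazy data-flow graph (hypothetical, not the HDM API)."""
    source: Optional[List] = None      # data held by a leaf node
    parent: Optional["Node"] = None    # upstream dependency of a derived node
    fn: Optional[Callable] = None      # element-wise function applied to parent

    def map(self, f: Callable[[T], U]) -> "Node[U]":
        # Record the dependency only; nothing is computed yet.
        return Node(parent=self, fn=f)

    def depth(self) -> int:
        # Number of map stages between this node and its leaf.
        return 0 if self.parent is None else 1 + self.parent.depth()

    def compute(self) -> List[T]:
        # Evaluate the graph stage by stage, starting from the leaf.
        if self.parent is None:
            return list(self.source)
        return [self.fn(x) for x in self.parent.compute()]


def fuse(node: Node) -> Node:
    """Collapse a chain of consecutive maps into a single map stage."""
    if node.parent is None:
        return node
    parent = fuse(node.parent)
    if parent.parent is None:
        return Node(parent=parent, fn=node.fn)
    f, g = parent.fn, node.fn
    return Node(parent=parent.parent, fn=lambda x: g(f(x)))


def clean(ds: Node) -> Node:
    """A reusable pipeline fragment: it takes a graph and returns a graph."""
    return ds.map(str.strip).map(str.lower)


words = Node(source=[" Spark ", "HDM"])
job = clean(words).map(len)   # compose the reusable fragment with a new stage
optimized = fuse(job)         # three map stages become one
print(job.depth(), optimized.depth(), optimized.compute())
```

Because `clean` returns a graph rather than an opaque packaged job, it can be extended with further stages, and the optimizer can inspect the whole chain. The fused job produces the same result as the original while traversing the data once instead of three times.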

Copyright myprojectbazaar 2020